Last year I sat down in a meeting room with a few colleagues to learn about Scala from one of Atlassian's biggest Scala advocates. I kept hearing good things about Scala, and I like trying new things, so I was eager to have my eyes opened and learn how awesome it was. Unfortunately, I was disappointed. Most of the lesson went straight over my head. But to my dismay, the other people in the room seemed to get it.

It was on this day that I realised an interesting thing about my learning style. I remembered that back at uni I was exactly the same in lectures: I never got anything I was taught in them, which is probably why, by the end of uni, I had pretty much stopped attending CS lectures altogether. It wasn't until I was given a real problem to solve in a lab that I got it. This is why I never really got functional languages at uni. The lecturers who taught them weren't the most practical of people. Actually, I don't think they ever even considered whether their work had any practical implications at all. So they taught things like map, reduce, filter, fold and curried functions like this: "Look, here's something you've never heard of! Behold its awesomeness! Now here's a trivial mathematical use case with no apparent real world application! Look at how practical it is!"

Some people have no problems learning this way, and I envy them. To learn something, I need a problem that I understand and want to solve today, and that the thing I'm learning can solve. So if you're reading this and thinking "That is so me!", and you want to learn Scala, then hopefully this is the blog post for you. I'm going to start with a practical problem that hopefully you will understand and want to solve, and then show you how three particular Scala features let us solve it really elegantly and simply. Hopefully after that, you'll have a good understanding of those features, and be able to understand a lot more Scala code when you encounter it.

The problem

The problem that I want to solve is dependency management. If you've ever used Maven, you'll know how verbose this can be. For those who haven't encountered Maven, here's what specifying a single dependency looks like:

<dependency>
  <groupId>net.vz.mongodb.jackson</groupId>
  <artifactId>mongo-jackson-mapper</artifactId>
  <version>1.4.1</version>
</dependency>

In English, this means a dependency on the artifact mongo-jackson-mapper version 1.4.1 from the organisation net.vz.mongodb.jackson. The English explanation is shorter than the code! Maybe the Maven authors could have made things nicer, and clearer too, by placing it all on one line, something like this:

<dependency>net.vz.mongodb.jackson:mongo-jackson-mapper:1.4.1</dependency>

There are, however, some advantages to the more verbose model. The language (XML) that the DSL (the Maven pom.xml format) uses is able to fully express every element of the dependency, rather than the elements being defined in some other format that XML is unaware of. There are also other attributes (such as scope and classifier) that the more verbose solution can specify easily and unambiguously. These become very hard to express if the dependency is just an arbitrary string of characters.

So here is the problem. I want a DSL to specify dependencies with as little noise as possible from the DSL, so that my dependency specification is short and unambiguous, with its format enforced by the language it's specified in. The language I'm going to use is Scala.

And just to ground this problem even more solidly in reality, this example is not contrived. SBT, the Scala Build Tool, uses Scala to specify its build configuration, including dependencies. The solution I'm going to show you is the solution that SBT uses. So by the end of this you will also understand a little about how to use SBT, which you will no doubt need to know as you delve into the world of Scala.

How would we do it with no weird Scala tricks?

First of all I will give a solution that uses language constructs that you should already be familiar with, using classes, fields, methods etc. If the following syntax makes no sense to you, you should probably do some reading on basic Scala syntax.

def groupId(groupId: String) = new GroupId(groupId)
class GroupId(val groupId: String) {
  def artifact(artifactId: String) = new Artifact(groupId, artifactId)
}
class Artifact(val groupId: String, val artifactId: String) {
  def version(version: String) = new VersionedArtifact(groupId, artifactId, version)
}
class VersionedArtifact(val groupId: String, val artifactId: String, val version: String) {
}

Here we have three classes, a GroupId, which has a method for creating an Artifact from that group id, which in turn has a method for creating a VersionedArtifact, which captures our dependency. There's also a factory method for creating the GroupId. To use it, we can do this:

groupId("net.vz.mongodb.jackson")
  .artifact("mongo-jackson-mapper")
  .version("1.4.1")

Already it's looking better than the XML version, but this could be done in Java too. Scala has a lot more to offer yet. As we go through the following lessons, it's important to remember that each change we make, we are still basically achieving the same as the above.
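If you want to paste the plain version into a file and run it, here is a minimal self-contained sketch. The wrapping object PlainDsl, the coordinates helper and the main method are my additions for illustration only; they are not part of SBT.

```scala
object PlainDsl {
  // Factory method: the entry point of the chain.
  def groupId(groupId: String) = new GroupId(groupId)

  class GroupId(val groupId: String) {
    def artifact(artifactId: String) = new Artifact(groupId, artifactId)
  }

  class Artifact(val groupId: String, val artifactId: String) {
    def version(version: String) = new VersionedArtifact(groupId, artifactId, version)
  }

  class VersionedArtifact(val groupId: String, val artifactId: String, val version: String) {
    // Hypothetical helper: renders the dependency in the colon-separated form shown earlier.
    def coordinates: String = s"$groupId:$artifactId:$version"
  }

  val dependency: VersionedArtifact =
    groupId("net.vz.mongodb.jackson")
      .artifact("mongo-jackson-mapper")
      .version("1.4.1")

  def main(args: Array[String]): Unit =
    println(dependency.coordinates)
}
```

Note that groupId("net.vz.mongodb.jackson").version("1.4.1") would not compile, because GroupId has no version method. The types force you to go through the artifact step, which is the "format enforced by the language" part of the problem statement.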

Lesson One: Scala methods can be made of operator characters

To Java developers such as myself, this is a bit weird, though in other languages it is quite common. There are some strict rules in Scala over exactly what a method name can be, but one of them is that it can be made of just operator characters (e.g. +, =, *, /, %, -). So, the first change that we're going to make to our above code is to replace the method names with an operator character. It makes things more concise, but other than that it may seem like a weird first step. Bear with me.

def groupId(groupId: String) = new GroupId(groupId)
class GroupId(val groupId: String) {
  def %(artifactId: String) = new Artifact(groupId, artifactId)
}
class Artifact(val groupId: String, val artifactId: String) {
  def %(version: String) = new VersionedArtifact(groupId, artifactId, version)
}
class VersionedArtifact(val groupId: String, val artifactId: String, val version: String) {
}

And so now our code looks like this:

groupId("net.vz.mongodb.jackson").%("mongo-jackson-mapper").%("1.4.1")
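To convince yourself that an operator-named method really is just a method, here is a small sketch unrelated to dependencies. The Path class and its / method are hypothetical names I made up for the example.

```scala
object OperatorNames {
  // A hypothetical class whose only method is named with an operator character.
  class Path(val value: String) {
    def /(child: String): Path = new Path(value + "/" + child)
  }

  val root = new Path("/var")

  // Called with ordinary dot-and-parentheses syntax, exactly like any other method.
  val logs: Path = root./("log")./("app")

  // Even arithmetic on Int is a method call underneath.
  val three: Int = (1).+(2)

  def main(args: Array[String]): Unit = {
    println(logs.value) // /var/log/app
    println(three)      // 3
  }
}
```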

Lesson Two: Scala lets you call methods without dots or parentheses

There are some strict rules governing this, including what happens when obvious ambiguities arise, and you can read about that in your own research. Simply put, if a method has only one parameter, you can call it without the dot or the parentheses, replacing them with whitespace. The notation still needs an object on the left-hand side to call the method on, so the groupId factory call keeps its parentheses:

groupId("net.vz.mongodb.jackson") % "mongo-jackson-mapper" % "1.4.1"

This is called "infix" notation, which you may have also come across in other languages. We're looking much more concise now, yet the language is still enforcing the rules of what a dependency needs, and we still end up with a strongly typed VersionedArtifact. But we're not finished yet.
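The same rule applies to every one-parameter method, not only to ones with operator names. A minimal sketch using nothing but the standard library (I'm assuming Scala 2 here; Scala 3 is stricter about infix calls to methods with alphanumeric names):

```scala
object InfixDemo {
  val numbers = List(1, 2, 3)

  // The same call written both ways.
  val dotted: Boolean = numbers.contains(2)
  val infix: Boolean = numbers contains 2

  // Operators of the same precedence associate left to right,
  // so this is ("a" + "b") + "c", just as a % b % c is (a % b) % c.
  val joined: String = "a" + "b" + "c"

  def main(args: Array[String]): Unit = {
    println(dotted == infix) // true
    println(joined)          // abc
  }
}
```

The left-to-right grouping is what makes the chained % calls work: the first % produces an Artifact, and the second % is then called on that Artifact.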

Lesson Three: Scala lets you implicitly convert any type to any other type

For me, this was the weirdest feature of Scala when I first learnt it. It sounded so cool and dangerous at the same time. In most languages, when you call a method on an object and that method doesn't exist on that object, you get an error. For statically typed languages this will be a compile error; for dynamic languages it will be a runtime error. However, Scala is a bit different. When it can't find a method on an object (at compile time, because Scala is statically typed), it has an extra step before it gives up with an error.

Scala has a concept of implicit methods (and fields, but right now let's only worry about methods). When a method that you call doesn't exist on the object that you're trying to invoke it on, the compiler will check the current scope for any implicit methods that take the type of your object as an argument. If the implicit method's result has a method that matches the one you are trying to invoke, then Scala wraps your object in that implicit method call. Have I lost you? Let's look at some code:

implicit def groupId(groupId: String) = new GroupId(groupId)

So the only change I've made to the groupId method is that it is now implicit. When I define my dependency, I do this:

"net.vz.mongodb.jackson" % "mongo-jackson-mapper" % "1.4.1"

Notice that the call to groupId has completely disappeared. If at this point our newly learned Scala syntax is a bit overwhelming for you, maybe it would help to see what would happen if we applied the implicit rule first, before we got rid of our dots and parentheses, and before we changed our method names to percent signs:

"net.vz.mongodb.jackson".artifact("mongo-jackson-mapper").version("1.4.1")

What it looks like is that the String class has a method called artifact. But we know it doesn't, and the Scala compiler knows it doesn't. When it sees that, it says "are there any implicit methods available that accept a String as an argument, and return a type that has an artifact method?" And so it finds the groupId method, which matches those criteria, and converts the code to this:

groupId("net.vz.mongodb.jackson").artifact("mongo-jackson-mapper").version("1.4.1")
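Putting the three lessons together, the whole DSL fits in a few lines. This is a minimal runnable sketch of the classes from this post, not SBT's actual source; the wrapping object DependencyDsl and the main method are my additions. The import of scala.language.implicitConversions only silences the feature warning that newer Scala 2 compilers emit for implicit conversions.

```scala
import scala.language.implicitConversions

object DependencyDsl {
  class GroupId(val groupId: String) {
    def %(artifactId: String) = new Artifact(groupId, artifactId)
  }

  class Artifact(val groupId: String, val artifactId: String) {
    def %(version: String) = new VersionedArtifact(groupId, artifactId, version)
  }

  class VersionedArtifact(val groupId: String, val artifactId: String, val version: String)

  // The conversion the compiler inserts when it finds no % method on String.
  implicit def groupId(groupId: String): GroupId = new GroupId(groupId)

  // Compiles to groupId("net.vz.mongodb.jackson").%("mongo-jackson-mapper").%("1.4.1")
  val dependency: VersionedArtifact =
    "net.vz.mongodb.jackson" % "mongo-jackson-mapper" % "1.4.1"

  def main(args: Array[String]): Unit =
    println(dependency.groupId + " / " + dependency.artifactId + " / " + dependency.version)
}
```

Only the first string is converted. The second % is called on the Artifact returned by the first, so no further implicit lookup is needed.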

When I first learnt about this feature, I thought that's going to cause massive amounts of confusion. And, if used irresponsibly, it certainly can. But when used carefully, it provides a massive amount of power, for example, in creating DSLs like the one above, or for adding methods to third party/legacy libraries.
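As a sketch of the "adding methods to third party libraries" use, here is the same trick applied to a JDK class we can't modify. RichDate, enrich and isWeekend are hypothetical names invented for this example.

```scala
import java.time.{DayOfWeek, LocalDate}
import scala.language.implicitConversions

object DateSyntax {
  // Hypothetical wrapper holding the method we wish LocalDate had.
  class RichDate(val date: LocalDate) {
    def isWeekend: Boolean = {
      val day = date.getDayOfWeek
      day == DayOfWeek.SATURDAY || day == DayOfWeek.SUNDAY
    }
  }

  // With this in scope, any LocalDate appears to have an isWeekend method.
  implicit def enrich(date: LocalDate): RichDate = new RichDate(date)

  val saturday: Boolean = LocalDate.of(2012, 9, 1).isWeekend // 1 September 2012 was a Saturday
  val monday: Boolean = LocalDate.of(2012, 9, 3).isWeekend

  def main(args: Array[String]): Unit = {
    println(saturday) // true
    println(monday)   // false
  }
}
```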

On a side note, Scala actually uses this to add functional methods to the Java collections API, by providing implicit conversion methods to convert Java collections to Scala collections. The confusion that could arise can be avoided by being careful to only import the implicit methods you need, where you need them. Scala allows you to put an import statement anywhere in code, so if you have one method in a class that needs to work with Java collections, you can import the implicit collection conversions into just that block of code. In the case of DSLs, their use is usually separated from the rest of your code by normal encapsulation, so a DSL for accessing a database would only appear in a DAO, where you're expecting it to appear, so there would be no confusion.
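Here is a minimal sketch of a block-scoped import. One caveat: I'm assuming Scala 2.13 or later, where the fully implicit Java collection conversions are no longer available, so the sketch swaps in scala.jdk.CollectionConverters, which adds an explicit asScala method through the same implicit machinery. The object and method names are made up for the example.

```scala
object ScopedImport {
  def shout(javaList: java.util.List[String]): List[String] = {
    // The import is visible only inside this method body.
    import scala.jdk.CollectionConverters._
    javaList.asScala.map(_.toUpperCase).toList
  }

  // Outside shout, java.util.List has no asScala method,
  // so nothing else in this object is affected by the import.

  def main(args: Array[String]): Unit =
    println(shout(java.util.Arrays.asList("a", "b"))) // List(A, B)
}
```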

Putting it into context

There is one last step, and that is to show what we actually do with the dependency we've declared. In Maven, our XML ends up being part of the rest of the overly verbose POM file. In SBT, there is a file called build.sbt that contains snippets of Scala, which build up the configuration. Available to the snippets are certain variables, for example, libraryDependencies, which contain the configuration. So adding my dependency to my SBT project means adding a line to the build.sbt file, like this:

libraryDependencies += "net.vz.mongodb.jackson" % "mongo-jackson-mapper" % "1.4.1"

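You may notice another operator-named method used in infix position here: +=. Scala's mutable collections define += for adding an element, and SBT defines a method of the same name on its setting keys, so this line appends one dependency to libraryDependencies. Multiple dependencies can be added more tersely with ++=, like this: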

libraryDependencies ++= Seq(
  "net.vz.mongodb.jackson" % "mongo-jackson-mapper" % "1.4.1",
  "net.vz.mongodb.jackson" % "play-mongo-jackson-mapper" % "1.0.0"
)
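Earlier I said that attributes such as scope and classifier are hard to express in a single string. As far as I know, SBT handles them with the same three tricks: one more % for the configuration, and a classifier method called in infix position. A sketch of what that looks like in build.sbt (the junit and testng coordinates are only examples, not dependencies of this project):

```scala
// build.sbt fragment (a sketch, not a complete build file)
libraryDependencies ++= Seq(
  // A further % with a configuration name restricts the dependency to test code.
  "junit" % "junit" % "4.11" % "test",
  // classifier is an ordinary one-parameter method on the result of the % chain.
  "org.testng" % "testng" % "5.7" classifier "jdk15"
)
```

Because methods with alphabetic names bind less tightly than operator-named ones in infix position, the classifier call applies to the whole % chain rather than just to the version string.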

So there we have it: a very terse way of specifying dependencies that is strongly typed, and so validated at compile time. I hope that the problem I've presented and solved is one you understand and can relate to, and that in seeing how Scala helps solve it you've learnt some important features of the language that you're now keen to apply to problems of your own.

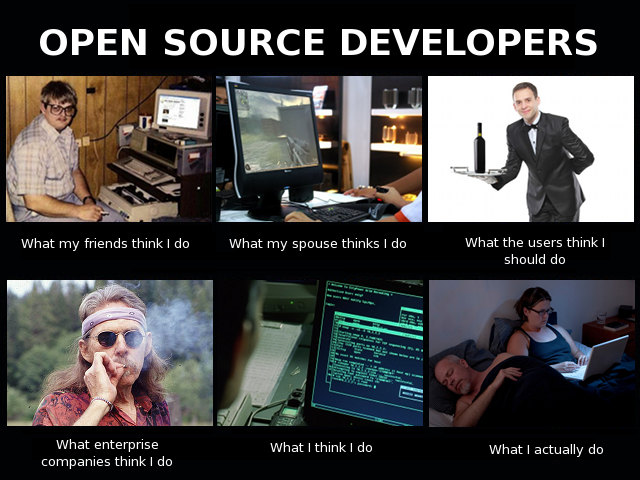

Hi! My name is James Roper, and I am a software developer with a particular interest in open source development and trying new things. I program in Scala, Java, Go, PHP, Python and Javascript, and I work for
